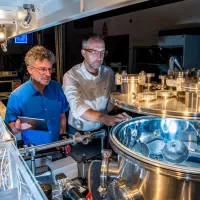

Research institute

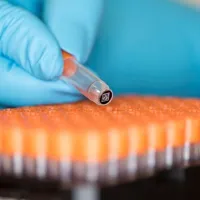

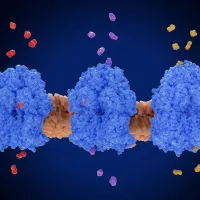

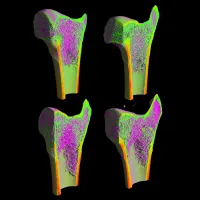

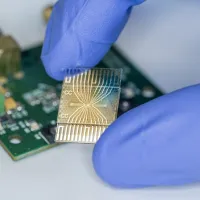

Institute for Life Sciences

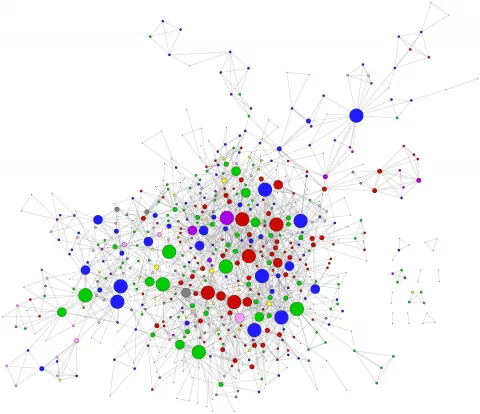

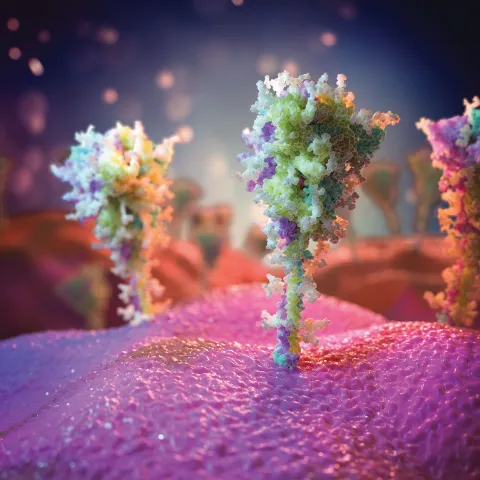

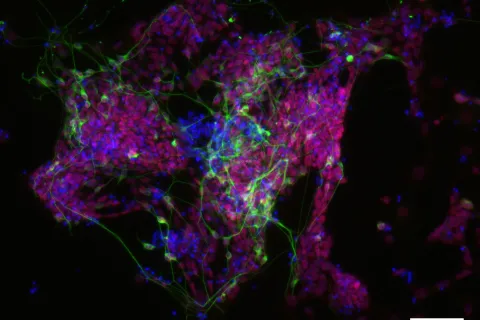

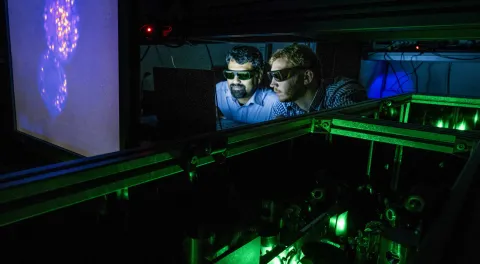

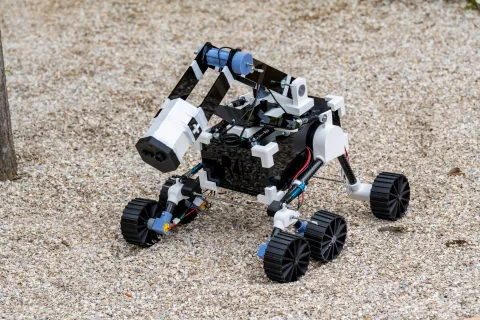

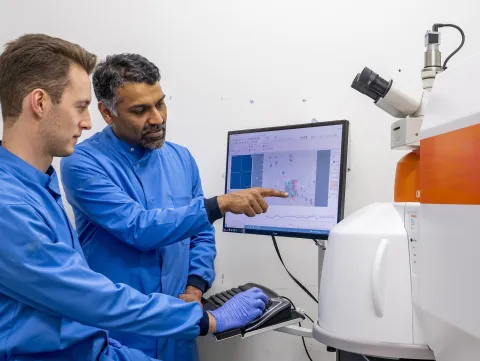

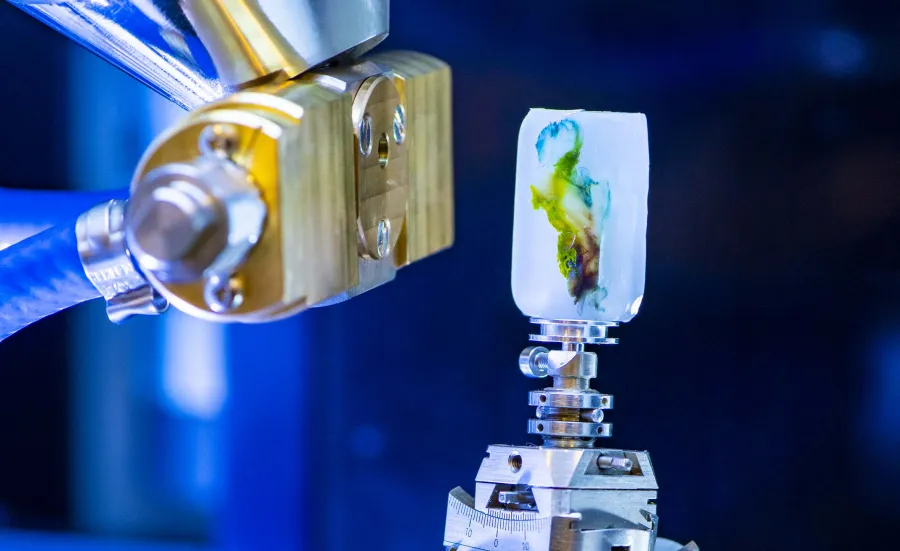

We bring together researchers with expertise across four themes: health and medicine, living systems, disruptive life technologies, and insights through data. We have an established reputation for working collaboratively, taking disruptive approaches, and embracing risk through interdisciplinary team science.